Author: Paul Campos

Here's a very interesting essay (gift link) on how three forms of gambling -- sports, prediction markets, and financial markets -- are merging on the smartphones of, in particular,.

In the last few months I've encountered three very distinguished academics who experienced significant cognitive decline very close to their 80th birthdays. One involved a formal diagnosis of dementia, another.

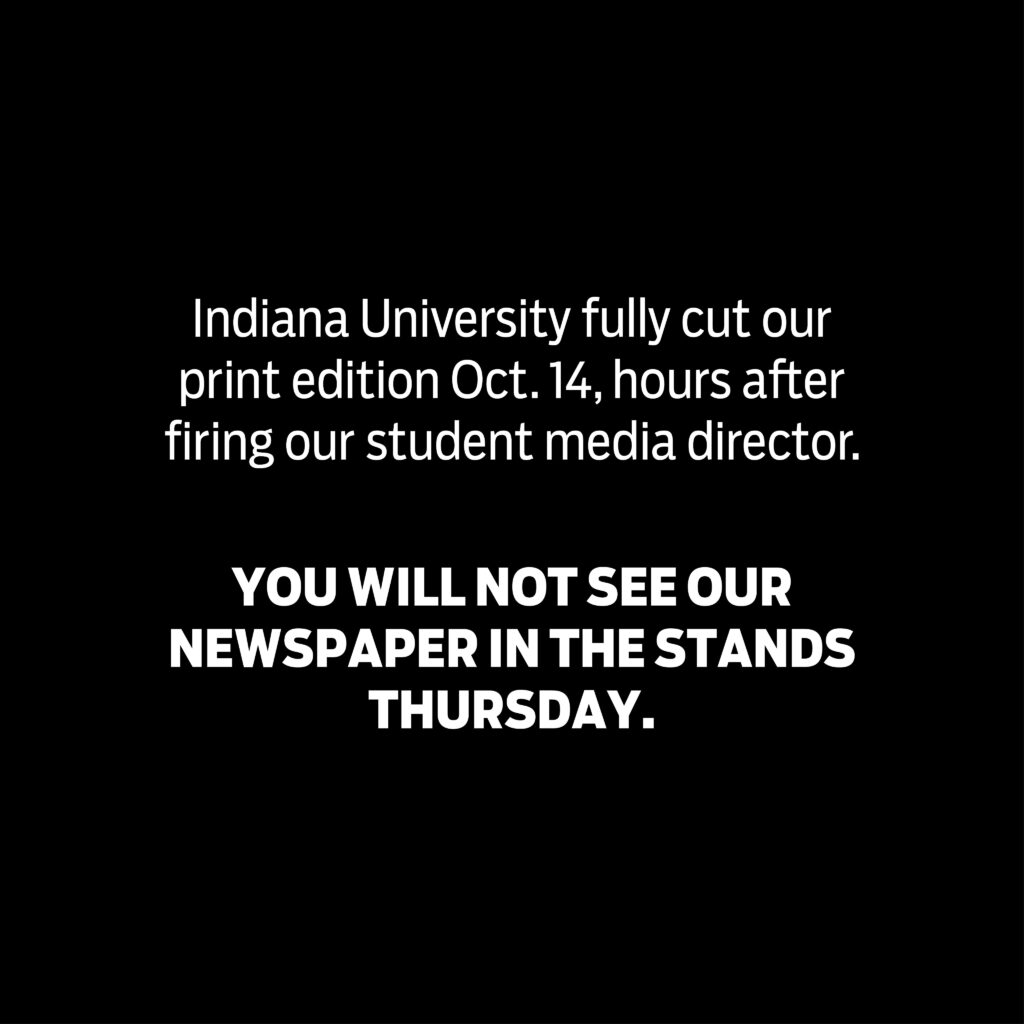

The world is changing: Now that's doing politics the right way. Meanwhile this happened at what is by far the most right wing of the service academies: A board of.

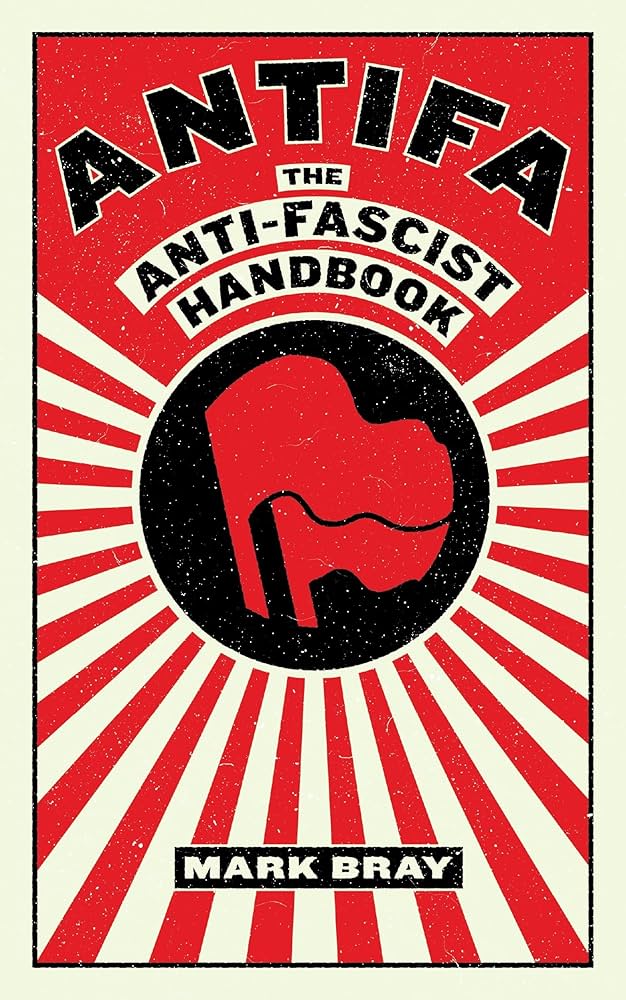

MARTIN: Mark Bray is an historian who teaches at Rutgers University. Back in 2017, he published a book titled "Antifa: The Anti-Fascist Handbook." It's meant to educate people about anti-fascism.

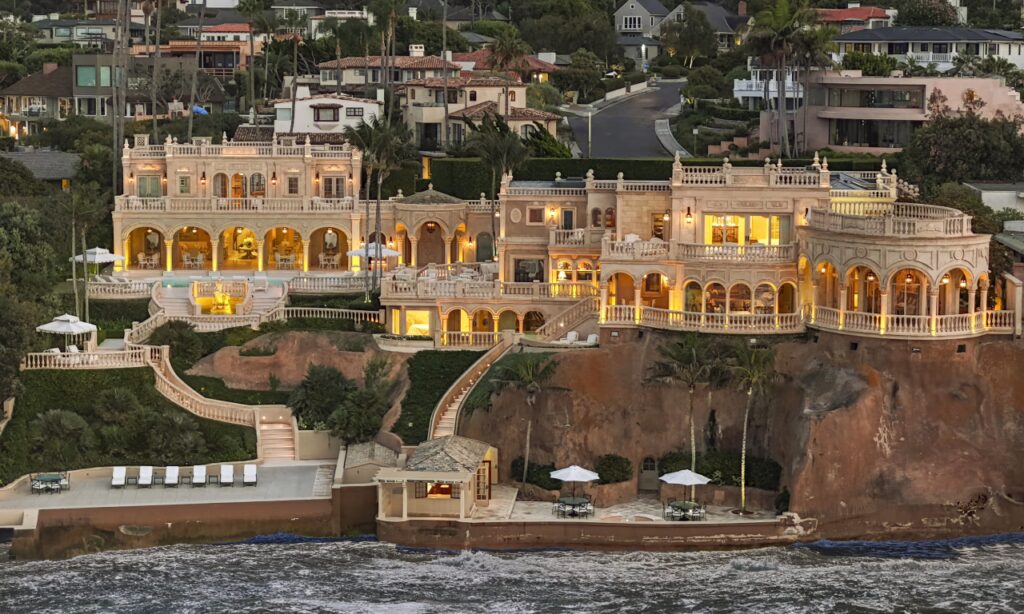

A lot of people remarked in yesterday's thread about the sources of right-wing revanchism that anxiety and dread about economic precarity is a central fact of life for so.

In addition to what Cheryl noted below, the head of the JAG abruptly retired effective today. She -- yes, one of those people -- is/was technically the Deputy Judge Advocate.

A commenter asked this question yesterday, and since this wasn't covered in law school I asked someone who is very familiar with the military justice system. They told me the.

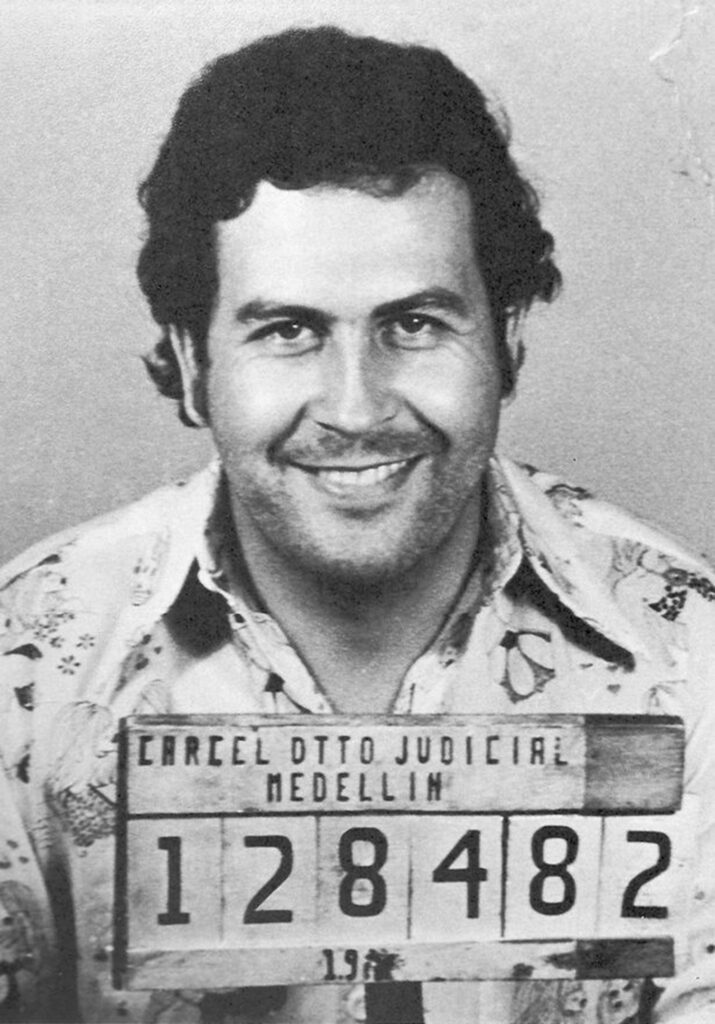

I want to follow up on this morning's post about the Trump regime's straightforward murder of suspected -- or more likely "suspected" -- drug smugglers. I think it's a mistake.