Corporate-Funded Research’s Inherent Biases

It’s fascinating to be doing completely unfundable research in the modern university. It means you don’t matter to the administration. At all. You are completely irrelevant. You add no value. That describes almost all humanities people and a good number of social scientists, though by no means all. Because universities want those corporate dollars, you are encouraged to do whatever corporations want. Bring in that money. But why would we trust any research funded by corporate dollars? The profit motive makes the research inherently questionable. As with the racism inherent in science and technology, the problem is that all researchers bring their life experiences into their research. There is no “pure” research because there are no pure people. The questions we ask are influenced by our pasts and the world in which we grew up. The questions we ask are also influenced by the needs of the funder. And if researchers go ahead with findings that the funder doesn’t like, they are severely disciplined. That can mean not winning the grants that keep you relevant at the university. Or, if you actually work for the corporation, being fired.

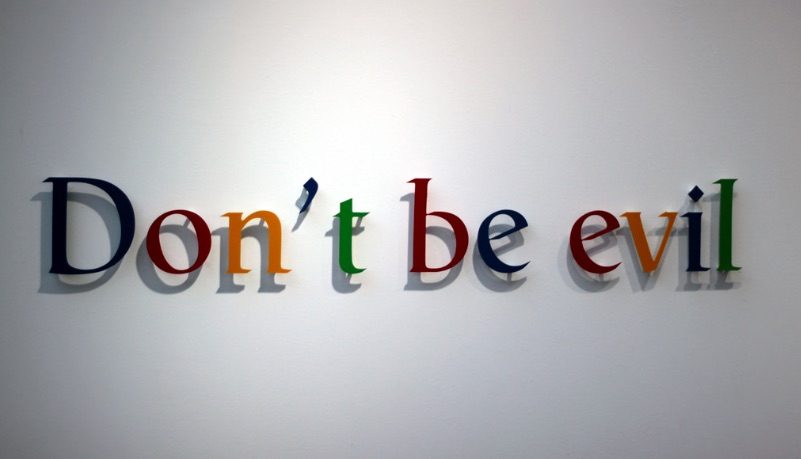

This leads us to the case of Timnit Gebru, fired from Google after a dispute over a research paper on the risks of large language models. She was already known for work showing how facial recognition technology reinforces racism in America. The issue here is that Google’s research on the impact of its own technologies is no different from the tobacco or oil industries’ research.

The story of what actually happened in the lead-up to Gebru’s exit from Google reveals a more tortured and complex backdrop. It’s the tale of a gifted engineer who was swept up in the AI revolution before she became one of its biggest critics, a refugee who worked her way to the center of the tech industry and became determined to reform it. It’s also about a company—the world’s fifth largest—trying to regain its equilibrium after four years of scandals, controversies, and mutinies, but doing so in ways that unbalanced the ship even further.

Beyond Google, the fate of Timnit Gebru lays bare something even larger: the tensions inherent in an industry’s efforts to research the downsides of its favorite technology. In traditional sectors such as chemicals or mining, researchers who study toxicity or pollution on the corporate dime are viewed skeptically by independent experts. But in the young realm of people studying the potential harms of AI, corporate researchers are central.

Gebru’s career mirrored the rapid rise of AI fairness research, and also some of its paradoxes. Almost as soon as the field sprang up, it quickly attracted eager support from giants like Google, which sponsored conferences, handed out grants, and hired the domain’s most prominent experts. Now Gebru’s sudden ejection made her and others wonder if this research, in its domesticated form, had always been doomed to a short leash. To researchers, it sent a dangerous message: AI is largely unregulated and only getting more powerful and ubiquitous, and insiders who are forthright in studying its social harms do so at the risk of exile.

Again, there is no pure research. There’s no pure science. There’s no pure technology. It takes a lot of bravery to try to be honest about this stuff when your job depends on it. The corporations will make sure it doesn’t happen again.